Global cybersecurity firm Palo Alto Networks has released research from its threat intelligence team, Unit 42, on how the increasing popularity of generative AI has led to a surge in scams themed around ChatGPT.

The study sheds light on the diverse tactics scammers use to trick users into sharing confidential information or installing malicious software. The research also provides concrete examples and case studies to demonstrate these methods.

Unit 42 looked at several phishing URLs that pretended to be the official OpenAI website. The scammers behind such schemes typically create counterfeit websites that closely resemble the official ChatGPT website, with the intention of tricking users into downloading malicious software or disclosing private, confidential information.

Additionally, scammers might use ChatGPT-related social engineering for identity theft or financial fraud. Even though OpenAI offers a free version of ChatGPT, scammers often mislead their victims into visiting fraudulent websites and paying for these services. For instance, a fake ChatGPT site could try to lure victims into providing their confidential information, such as credit card details and email address.

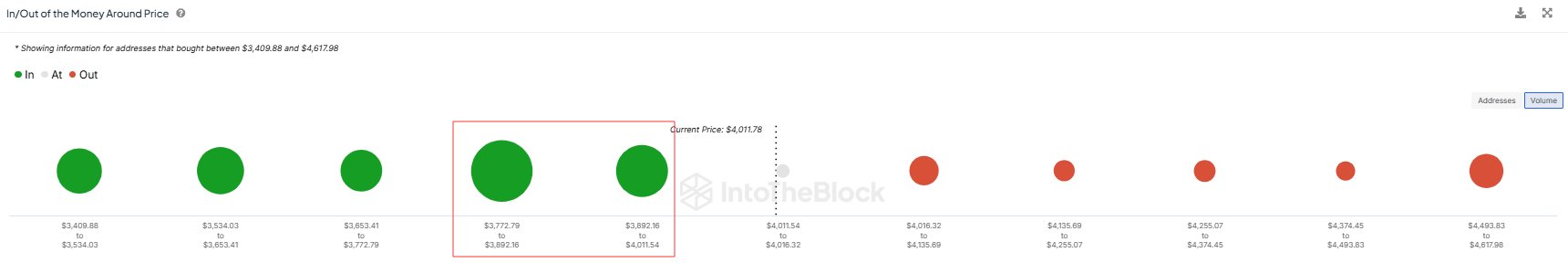

The researchers also noticed that some scammers are exploiting the growing popularity of OpenAI for crypto fraud. For example, scammers can abuse the OpenAI logo and Elon Musk’s name to attract victims to a fraudulent crypto giveaway event.

“The rise of generative AI has opened up new avenues for scammers to exploit unsuspecting users,” says Sean Duca, VP and Regional Chief Security Officer at Palo Alto Networks.

“We must educate ourselves and stay informed about the tactics scammers employ to protect ourselves and our sensitive information,” he says.

“Unit 42’s research highlights the need for a robust cybersecurity framework to safeguard against the growing threat landscape. As technology continues to evolve, so must our approach to cybersecurity.”

Key findings from the Unit 42 report include:

- The AI ChatGPT extension can add a background script containing highly obfuscated JavaScript to the victim’s browser. This JavaScript calls the Facebook API to steal the victim’s account details, and it might gain further access to their account.

- Between November 2022 and April 2023, Unit 42 observed a 910% increase in monthly registrations for domains related to ChatGPT.

- There were more than 100 daily detections of ChatGPT-related malicious URLs captured from traffic seen in the Palo Alto Networks Advanced URL Filtering system.

- In the same timeframe, the team observed nearly 18,000% growth in squatting domains seen in DNS security logs. Unit 42 observed multiple phishing URLs attempting to impersonate official OpenAI sites. Typically, scammers create a fake website that closely mimics the appearance of the official ChatGPT website, then trick users into downloading malware or sharing sensitive information.

- Despite OpenAI giving users a free version of ChatGPT, scammers lead victims to fraudulent websites, claiming they need to pay for these services.
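
To make the idea of a squatting domain concrete, the sketch below flags hostnames that contain a ChatGPT-related keyword but do not belong to an allowlisted official domain. It is only an illustration and not the method Unit 42 used: the allowlist, the keyword list, and the function names are all assumptions made for this example, and a real detector would need far more signals than a substring match.

```python
# Hypothetical allowlist of registrable domains treated as official (an assumption).
OFFICIAL_DOMAINS = {"openai.com"}

# Hypothetical brand keywords that squatting domains tend to embed (an assumption).
BRAND_KEYWORDS = ("chatgpt", "openai")


def is_official(hostname: str) -> bool:
    """Return True if the hostname is an allowlisted domain or one of its subdomains."""
    host = hostname.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in OFFICIAL_DOMAINS)


def looks_like_squatting(hostname: str) -> bool:
    """Flag hostnames that use a brand keyword without being an official domain."""
    host = hostname.lower().rstrip(".")
    if is_official(host):
        return False
    return any(keyword in host for keyword in BRAND_KEYWORDS)


if __name__ == "__main__":
    # The non-official hostnames here are invented examples, not observed domains.
    for name in ("chat.openai.com", "chatgpt-free-download.example", "example.org"):
        print(name, looks_like_squatting(name))
```

The check for a leading dot before the official domain matters: a hostname such as `openai.com.evil.example` contains the brand name but is not a subdomain of `openai.com`, so it is still flagged.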
